Introduction

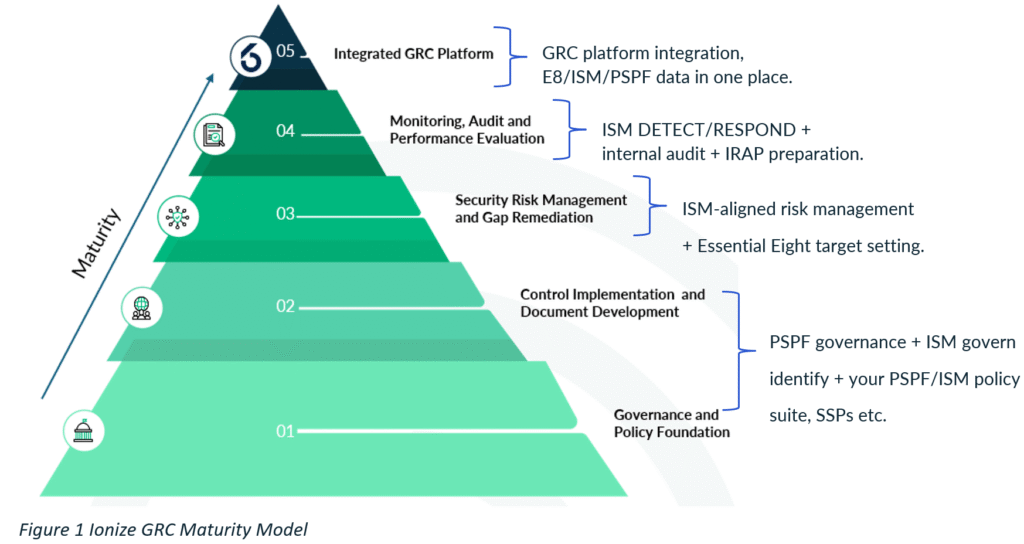

As Principal of the Ionize GRC team and CISO, I spend a lot of time with colleagues across government who are simultaneously managing PSPF, ISM, Essential Eight, IRAP, DISP, portfolio-specific directions and an ever-growing backlog of projects. The Ionize GRC Maturity Model is designed to bring structure and coherence to that complexity.

In this paper, I outline how I would apply the model to assess your current position and shape a cybersecurity program that is coherent, defensible and genuinely fundable.

A Single Framework for a Very Noisy Environment

The Ionize GRC Maturity Model is structured as five levels that describe how a security and GRC capability matures over time:

- Level 1 – Governance and policy foundation

- Level 2 – Control implementation and documentation (including SSPs, IRPs, DR/BCP and procedures)

- Level 3 – Risk management and gap remediation

- Level 4 – Monitoring, audit and performance evaluation

- Level 5 – Integrated GRC platform and continuous control monitoring

Each Level is deliberately concrete. It is defined in terms of artefacts, roles, decision rights and behaviours, not abstract labels like “ad hoc” or “optimised”. It aligns to ISO 27001 and the reality of PSPF, ISM, Essential Eight and IRAP in the Australian Government context.

You can think of Levels 1–3 as building how you run security, Level 4 as proving it works over time, and Level 5 as using platforms and automation to make it sustainable and visible.

Evaluating your current state with the model

To evaluate your current state, you treat those five levels as five lenses on the same security program – governance and policy, control implementation and documentation, risk management, assurance and monitoring, and integrated GRC and automation.

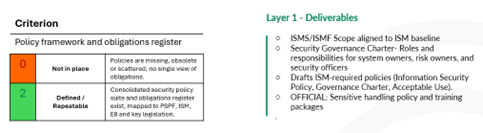

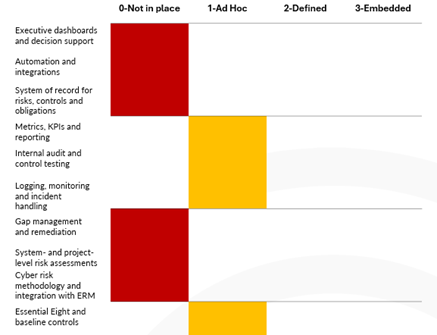

I recommend that you define a simple, consistent scale for each level. For example, from zero to three, where zero means not in place, one means ad hoc or inconsistent, two means defined and repeatable, and three means embedded and measured. You then run a structured assessment workshop with your direct reports and key stakeholders from governance, risk, architecture, SOC, operations, GRC and privacy, and work your way through the levels in turn. A detailed evaluation matrix is included at the end of this document.

Figure 2 – Recommended Scale for Assessment

In the governance and policy level, you are evaluating the strength of your foundations. You examine whether there is a clearly defined ISMS and GRC scope aligned to PSPF and ISM obligations, whether roles and accountabilities are genuinely clear, whether security committees operate with meaningful charters, and whether your policy suite is consolidated, current and explicitly mapped to PSPF and ISM requirements. You also look for a single, maintained obligations register covering legislation, PSPF, ISM, Essential Eight, DISP and portfolio-specific directions, rather than tacit knowledge scattered across teams. The picture that emerges tells you how firm the ground floor really is.

Figure 3 – Measuring your Foundations

In the control implementation and documentation level, the focus shifts from intent to practice. For your major systems, you assess the existence and quality of System Security Plans, incident response plans, disaster recovery and business continuity plans, and system operating procedures. You check whether these documents align with ISM expectations and the way systems are actually run, not just the way they were meant to be run when someone filled in a template three years ago. You review Essential Eight implementation against your stated target maturity levels and ask a simple question: how easily could we demonstrate control implementation and configuration to an IRAP assessor or an internal audit? This tells you whether your control environment is robust and evidence-ready, or whether it relies on heroics and tribal knowledge.

In the risk management level, you look at how cyber risk is identified, analysed and tracked across the department. You examine whether there is a single, ISM-aligned methodology that is used for projects, changes and enterprise risks; whether cyber risks appear coherently in the departmental risk register; and whether risk appetite and tolerance are articulated in a way that guides decisions, rather than just appearing in an annual report. You also check that Essential Eight and broader ISM gaps are captured as risks with explicit treatments, owners and timelines, rather than living only in technical reports. This shows you whether “risk-based” is a reality or a distant aspiration.

In the assurance and performance level, you evaluate your feedback loops. You examine how logging, detection and incident response line up with ACSC guidance and your own risk scenarios; how internal audits, control tests and Essential Eight spot checks are planned and executed; and how findings from IRAP, ACSC engagements and internal reviews are captured, prioritised and driven through to closure. You are looking for evidence that issues and weaknesses are managed within a disciplined assurance program, rather than treated as isolated fires.

Finally, in the integrated GRC and automation level, you look at how all of this is stitched together in systems and data. You determine whether there is a single system of record for risks, controls, obligations, incidents and audits; whether PSPF, ISM and Essential Eight reporting can be generated reliably from a common data set; and to what extent continuous control monitoring is in place for vulnerability management, logging coverage and Essential Eight telemetry. You also assess whether dashboards and reports based on that data are actively used by your executive and governance committees or produced solely for compliance.

When you pull this together, ideally in a simple heatmap or narrative summary, you end up with a very clear, level-by-level picture of where you are strong, where you are fragile, and where you are trying to operate at a higher level than your foundations support. That picture is far more useful than a standalone E8 score or PSPF rating.

Reconciling PSPF, ISM, Essential Eight and IRAP

Every Federal Government department is living with multiple forms of maturity reporting: PSPF self-assessments, Essential Eight maturity reporting to ACSC, ISM adoption expectations, IRAP reports on critical systems and, often, portfolio-specific requirements as well.

Figure 4 – Level Assessment Heatmap

What the Ionize model lets you do is put those reporting obligations into context.

If your Essential Eight maturity for patching and MFA has stalled, you can ask whether that is truly a tooling problem, or whether progress is hamstrung by weak standard baselines (Level 2), inconsistent risk prioritisation (Level 3) or the absence of meaningful metrics and ownership (Level 4). If your PSPF governance outcome is only “partially effective”, you can explicitly identify that as a Level 1 and Level 3 issue, rather than blaming tools or SOC processes in Level 4.

In other words, instead of having separate, sometimes contradictory scorecards, you have a single language for explaining why those scores look the way they do and what you intend to do to address the issues.

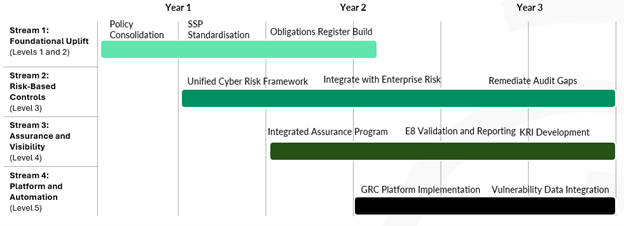

Turning diagnosis into a three-year roadmap

Once you have that level-by-level picture, the next step is to convert it into a roadmap.

I suggest that CISOs set realistic target levels by domain. You might decide that enterprise governance should reach Level 4 within three years, that high-risk or PROTECTED systems should target Levels 4–5, and that lower-risk business applications only need to solidify at Level 3. That immediately makes your program risk-based and aligned with both ISM and Essential Eight guidance about tailoring controls to business criticality, rather than trying to do everything at once.

You then sequence initiatives by dependency, following a simple rule: do not invest ahead of your level. If your governance is weak, fix that before rolling out a large GRC platform. If your controls are undocumented and inconsistent, address that before you invest in continuous monitoring. If you keep getting hammered in audits on the same themes, fix the underlying Level 2 and 3 issues rather than running more Level 4 spot checks.
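The sequencing rule above can be checked mechanically once each level has a rolled-up score from the assessment matrix. The sketch below is illustrative only: it assumes per-level scores on the 0–3 scale, treats a score of 2 ("Defined / Repeatable") as a hypothetical foundation threshold, and the function name `investing_ahead` is invented for this example rather than part of the Ionize model.

```python
from typing import Dict, List

# Hypothetical threshold: a level is treated as a stable foundation once its
# rolled-up score reaches 2 ("Defined / Repeatable") on the 0-3 scale.
FOUNDATION_THRESHOLD = 2.0


def investing_ahead(level_scores: Dict[int, float],
                    threshold: float = FOUNDATION_THRESHOLD) -> List[int]:
    """Return the levels that are maturing faster than their foundations.

    level_scores maps a level number (1-5) to its rolled-up 0-3 score.
    A level is flagged when some lower level is still below the threshold
    and this level already scores higher than the weakest such lower level.
    """
    flagged = []
    for level in sorted(level_scores):
        weak_lower = [score for lower, score in level_scores.items()
                      if lower < level and score < threshold]
        if weak_lower and level_scores[level] > min(weak_lower):
            flagged.append(level)
    return flagged


if __name__ == "__main__":
    # Illustrative scores only: weak control documentation (Level 2) while
    # risk work (Level 3) and platform work (Level 5) push ahead.
    print(investing_ahead({1: 2.3, 2: 1.3, 3: 2.0, 4: 1.0, 5: 1.7}))
```

With those illustrative scores the function flags Levels 3 and 5: both are being built on control documentation (Level 2) that is not yet repeatable, which is exactly the situation the rule warns against. The threshold is a judgement call; a stricter program might require a 3 before building on a level.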

From there, you group initiatives into a small number of streams aligned to the levels: governance and policy consolidation; control and SSP standardisation; a unified cyber risk framework integrated with enterprise risk; integrated assurance and Essential Eight validation; and then platform and automation once the foundations are in place. Each stream gets clear objectives, ownership, indicative timing and explicit links to your external obligations and internal pain points.

The result is a roadmap that reads logically upward through the levels, instead of a disconnected list of projects.

Figure 5 – Example High Level Roadmap

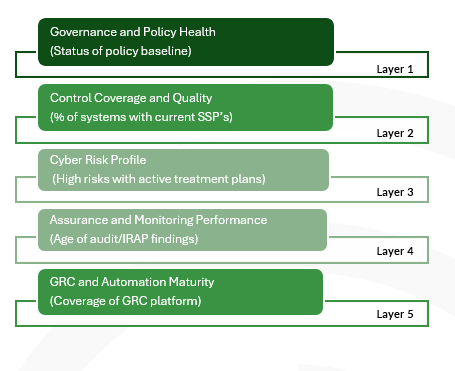

Embedding the levels in governance and reporting

The model really comes to the fore when you use it to reshape the way you report to the Secretary, the Executive Committee and the Audit and Risk Committee.

Rather than structuring your papers around technology silos (“network security”, “end-user computing”, “identity”, “projects”), you can frame them around the five levels. You provide a short narrative and a small set of indicators for each: the health of your governance and policy baseline, the coverage and quality of your control documentation, the state of your cyber risk profile, the performance of assurance and monitoring, and the maturity of your integrated GRC and automation.

A small set of carefully chosen measures under each level is usually enough. Examples include the percentage of systems with current SSPs and IRPs, the proportion of high cyber risks with active treatment plans, the age and status of audit and IRAP findings, the spread of Essential Eight maturity levels across key environments, and the coverage of systems and controls in any GRC or monitoring platform.
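Measures like these are easiest to trust when they are computed the same way every reporting cycle rather than assembled by hand. The sketch below shows three of them under stated assumptions: the record types (`System`, `Risk`, `Finding`), the function `level_indicators` and the 12-month rule for treating an SSP as "current" are all hypothetical, not something prescribed by the model, PSPF or the ISM.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Dict, List, Optional

# Hypothetical currency rule: an SSP counts as "current" if it was reviewed
# within the last 365 days. Replace with your own review policy.
SSP_CURRENCY_DAYS = 365


@dataclass
class System:
    name: str
    ssp_last_reviewed: Optional[date]  # None if the system has no SSP
    has_irp: bool


@dataclass
class Risk:
    rating: str                 # e.g. "High", "Medium", "Low"
    has_active_treatment: bool


@dataclass
class Finding:
    source: str                 # e.g. "IRAP", "Internal audit"
    opened: date
    closed: Optional[date] = None


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place; 0.0 when there is nothing to measure."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def level_indicators(systems: List[System], risks: List[Risk],
                     findings: List[Finding], today: date) -> Dict[str, float]:
    """Compute a handful of reporting indicators from simple records."""
    current_ssp = sum(
        1 for s in systems
        if s.ssp_last_reviewed is not None
        and (today - s.ssp_last_reviewed).days <= SSP_CURRENCY_DAYS
    )
    with_irp = sum(1 for s in systems if s.has_irp)
    high = [r for r in risks if r.rating == "High"]
    treated = sum(1 for r in high if r.has_active_treatment)
    # Age in days of every finding that is still open.
    open_ages = [(today - f.opened).days for f in findings if f.closed is None]
    return {
        "pct_systems_current_ssp": pct(current_ssp, len(systems)),
        "pct_systems_with_irp": pct(with_irp, len(systems)),
        "pct_high_risks_treated": pct(treated, len(high)),
        "open_findings": float(len(open_ages)),
        "median_open_finding_age_days": float(median(open_ages)) if open_ages else 0.0,
    }
```

The median age of open findings is used rather than the mean so that one very old finding does not dominate the figure; reporting both alongside the count of open items is a reasonable alternative.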

Figure 6 – Reporting on Capability Maturity

Presented this way, your program stops looking like a collection of technical initiatives and starts reflecting what it should be: the progressive construction of a resilient, auditable and transparent security capability.

Using the model with IRAP and ACSC

For systems heading toward IRAP assessment or increased ACSC scrutiny, the model helps you avoid wasting effort.

You can insist that any system be brought to at least a credible Level 3, with a clear governance context, documented controls and a coherent risk picture, well before you send an IRAP assessor anywhere near it. Above that, you can aim to bring critical systems to Level 4 so that IRAP findings are naturally absorbed into your normal assurance and remediation machinery, instead of becoming separate, ad hoc workstreams every couple of years.
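One way to make "a credible Level 3" concrete before booking an assessor is a simple evidence checklist per system, grouped under the three areas named above. This is a minimal sketch: the artefact names in `READINESS_EVIDENCE`, the `SystemEvidence` type and the `irap_readiness_gaps` function are hypothetical illustrations, not an IRAP or ACSC requirement list.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

# Hypothetical evidence checklist for a "credible Level 3" before IRAP.
# The three areas mirror the text; the artefact names are illustrative.
READINESS_EVIDENCE: Dict[str, List[str]] = {
    "governance context": ["system scope statement", "system owner assigned"],
    "documented controls": ["current SSP", "IRP", "operating procedures"],
    "risk picture": ["current risk assessment", "risk treatment plan"],
}


@dataclass
class SystemEvidence:
    name: str
    artefacts: Set[str] = field(default_factory=set)  # artefacts that exist today


def irap_readiness_gaps(system: SystemEvidence) -> Dict[str, List[str]]:
    """Return missing artefacts grouped by area; an empty dict means no gaps."""
    gaps: Dict[str, List[str]] = {}
    for area, required in READINESS_EVIDENCE.items():
        missing = [item for item in required if item not in system.artefacts]
        if missing:
            gaps[area] = missing
    return gaps


def ready_for_irap(system: SystemEvidence) -> bool:
    """A system passes the gate only when every area has all its evidence."""
    return not irap_readiness_gaps(system)
```

Running the check across every system in your IRAP pipeline yields a short, area-by-area list of what still has to be produced, which is easier to plan and fund than discovering the same gaps during the assessment itself.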

Similarly, you can take the inevitable shifts in Essential Eight guidance and maturity expectations and explain them through the levels: which changes you must incorporate into Level 2 baselines, how they will be reflected in Level 3 risks and treatment plans, and how you will adjust Level 4 monitoring and validation to prevent regressions. That turns external assessments from surprises into inputs to your roadmap.

The value of the Ionize GRC Maturity Model for you as a CISO is that it gives you one coherent story that runs from where you are today to where you need to be. It lets you evaluate your current state in a structured, evidence-based way, reconcile PSPF, ISM, Essential Eight and IRAP into a single picture, and then translate that picture into a practical three-year roadmap that builds upwards through the levels. It gives you a clear, repeatable frame for how you talk about security with your Executive, and a disciplined way to turn IRAP and ACSC demands into planned work rather than constant disruption. Rather than being just a diagram on a slide, the model becomes the organising logic for a security program that is risk-based, auditable and sustainable within the Federal Government context.

GRC Maturity Model Assessment

How to use the Matrix

- Pick the scope – whole department, or a specific environment (e.g. PROTECTED systems).

- For each row, ask your stakeholders which description (0–3) best matches reality today.

- Capture evidence or examples as you go (systems, documents, reports, incidents).

- Roll up scores per level (average or median) to see where you’re strongest and weakest.

Use the result to:

- Build a heatmap (levels vs criteria).

- Prioritise initiatives (e.g. fix Level 1/2 gaps before chasing Level 5 tooling).

- Explain to your Secretary and ARC why PSPF / ISM / E8 / IRAP outcomes look the way they do.
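The roll-up and heatmap steps above are simple enough to script, which keeps the arithmetic consistent between assessment rounds. A minimal sketch, assuming workshop scores are captured as a mapping from level number to `{criterion: score}` on the 0–3 scale; the helper names `roll_up` and `heatmap_rows` are hypothetical, and the plain-text heatmap stands in for whatever charting tool you actually use.

```python
from statistics import mean, median
from typing import Dict, List

# The 0-3 scale used for every row of the matrix.
SCALE: Dict[int, str] = {
    0: "Not in place",
    1: "Ad hoc / Fragmented",
    2: "Defined / Repeatable",
    3: "Embedded / Measured",
}

# Workshop scores: level number -> {criterion: score on the 0-3 scale}.
Scores = Dict[int, Dict[str, int]]


def validate(scores: Scores) -> None:
    """Reject any score outside the agreed 0-3 scale."""
    for level, rows in scores.items():
        for criterion, value in rows.items():
            if value not in SCALE:
                raise ValueError(f"Level {level} / {criterion}: {value} is not on the 0-3 scale")


def roll_up(scores: Scores, method: str = "mean") -> Dict[int, float]:
    """Roll criterion scores up to one figure per level (mean or median)."""
    validate(scores)
    if method not in ("mean", "median"):
        raise ValueError("method must be 'mean' or 'median'")
    aggregate = mean if method == "mean" else median
    return {level: round(float(aggregate(list(rows.values()))), 2)
            for level, rows in sorted(scores.items())}


def heatmap_rows(scores: Scores) -> List[str]:
    """Render a plain-text heatmap: one row per criterion, one '#' per score point."""
    validate(scores)
    rows = []
    for level in sorted(scores):
        for criterion, value in scores[level].items():
            rows.append(f"L{level} | {criterion:<45} | {'#' * value:<3} | {value} {SCALE[value]}")
    return rows
```

The mean shows gradual progress within a level, while the median is less sensitive to a single outlying criterion; reporting both is cheap. Taking `min(levels, key=levels.get)` over the rolled-up result points at the weakest level, which is usually where the roadmap should start.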

The Scoring Scale

Use the same scale for every row:

- 0 – Not in place: No consistent approach; nothing formal, or only in pockets.

- 1 – Ad hoc / Fragmented: Some practices exist but are inconsistent, person-dependent, or undocumented.

- 2 – Defined / Repeatable: Documented, approved and used in most relevant areas; not yet fully measured or optimised.

- 3 – Embedded / Measured: Widely adopted, routinely measured, continually improved; survives staff turnover and audit scrutiny.

Level 1 – Governance and Policy Foundation

| Criterion | 0 – Not in place | 1 – Ad hoc / Fragmented | 2 – Defined / Repeatable | 3 – Embedded / Measured |
| --- | --- | --- | --- | --- |
| ISMS / security scope and context | No clear statement of security scope; obligations and environments are implied, not written down. | Some scope statements exist (e.g. for individual systems), but they are incomplete, out of date or not used in decision-making. | ISMS / security scope is documented, approved and aligned with PSPF/ISM; used for audits and major decisions. | Scope is routinely reviewed, updated for organisational change and referenced in governance, risk and project processes. |
| Roles, accountability and committees | No defined CISO/CSO role or security governance structure; responsibilities are unclear. | Roles exist on paper and committees meet, but accountability gaps and overlaps are common. | Security roles, responsibilities and committees are defined, chartered and mostly followed in practice. | Roles and committees operate as designed; decisions, escalations and reporting are consistent and evidenced. |
| Policy framework and obligations register | Policies are missing, obsolete or scattered; no single view of obligations. | Mix of old and new policies; some alignment to PSPF/ISM/E8 but no authoritative register. | Consolidated security policy suite and obligations register exist, mapped to PSPF, ISM, E8 and key legislation. | Policies and obligations are kept current through a regular review cycle and used actively in risk, audit and project processes. |

Typical deliverables for a government security program at Level 1

- ISMS Scope Statement / System Boundary Diagram (text + architecture diagram).

- Information Security Policy (approved by executive) and associated policy register.

- Compliance Obligations Register (PSPF, ISM, Privacy Act, DISP mapping).

- Roles and Responsibilities Matrix (RACI) for security functions.

- Security Governance Charter / Terms of Reference for governance committees.

- Risk Management Framework & Risk Appetite Statement (methodology, scales, treatment options).

- Policy Review Schedule and Policy Owner Registry.

- Executive Briefing Pack (initial) outlining current posture, top risks, and required decisions/resourcing.

Level 2 – Control Implementation and Documentation

| Criterion | 0 – Not in place | 1 – Ad hoc / Fragmented | 2 – Defined / Repeatable | 3 – Embedded / Measured |
| --- | --- | --- | --- | --- |
| System Security Plans (SSPs) and system documentation | SSPs and similar artefacts are largely absent or exist only for a few systems. | SSPs exist for some priority systems but are incomplete, outdated or inconsistent in structure and quality. | SSPs exist for all in-scope systems at required classifications, using a consistent template aligned to ISM. | SSPs are current, drive implementation decisions and are routinely used in IRAP, audit and change processes. |
| IRP / DR / BCP and playbooks | Incident response and recovery are informal; no documented processes or only high-level statements. | Some plans exist (e.g. cyber-IRP or DR) but they are not regularly tested or widely understood. | Documented IRP, DR and BCP plans exist for major systems and are tested periodically, with lessons incorporated. | Plans are integrated with enterprise crisis management; exercises are regular and drive continuous improvement. |
| Essential Eight and baseline controls | No coherent view of Essential Eight; implementation is opportunistic. | Some strategies are implemented (e.g. patching, MFA) but maturity is inconsistent across environments. | Target maturity levels are defined; baselines and build patterns exist and are applied to most in-scope systems. | Essential Eight is monitored, exceptions are formally managed, and maturity levels are sustained or improving across the estate. |

Typical deliverables for a government security program at Level 2

- ISM Annex A — control list with implementation status and rationale.

- System Security Plan (SSP) — full system-level control descriptions and implementation evidence.

- Control Implementation Matrix — mapping controls → procedures → evidence → owners.

- Operational SOPs and Runbooks — patching, backups, access provisioning, change control.

- Configuration Baselines & Hardening Guides — standard images and hardening scripts.

- Logging & Monitoring Implementation Document — SIEM data sources, retention, alerting rules.

- Supplier / Third-Party Control Evidence Pack — cloud provider attestations, vendor SOC reports, contractual security clauses.

- Statement of Residual Risk / Exceptions Register — documented, approved exceptions with compensating controls.

Level 3 – Security Risk Management and Gap Remediation

| Criterion | 0 – Not in place | 1 – Ad hoc / Fragmented | 2 – Defined / Repeatable | 3 – Embedded / Measured |
| --- | --- | --- | --- | --- |
| Cyber risk methodology and integration with ERM | No consistent cyber risk method: risks are described inconsistently or only in technical language. | A method exists but is used inconsistently; cyber risks are partially represented in the enterprise risk register. | A documented, ISM-aligned cyber risk methodology is used for projects, systems and enterprise risks; cyber risk is integrated with ERM. | Risk criteria, appetite and tolerance are actively used in decisions; cyber risk is regularly discussed at executive and ARC level. |
| System- and project-level risk assessments | Risk assessments are rare or only done for major procurements. | Some systems and projects undergo assessments, but coverage and quality are inconsistent. | Key systems and projects have recorded risk assessments, with treatments linked to control implementation. | Risk assessments are systematic, kept current and drive prioritisation of remediation and funding decisions. |
| Gap management and remediation (ISM / E8 / IRAP) | Gaps are known informally but not tracked; remediation is reactive and uncoordinated. | There are lists of findings and issues, but ownership, timelines and status are opaque. | Gaps from audits, IRAP and E8 assessments are tracked in a register with assigned owners and due dates. | Gaps are prioritised by risk, tracked to closure, and trend information is used to refine controls and strategy. |

Typical deliverables for a government security program at Level 3

- Security Risk Management Plan (SRMP) — governance, methodology, roles.

- System-level Risk Register — scored risks, residual risk after controls.

- Enterprise Risk Register — aggregated strategic risks and trends.

- POA&M / Remediation Backlog — prioritised actions, owners, timelines.

- Risk Treatment Worksheets — decision records & executive approvals for accepted risks.

- Remediation Project Charters — for major uplift efforts (e.g., patching sprint, privileged access overhaul).

- Supplier Risk Assessments — for critical vendors with remediation plans.

- Evidence of Risk Reduction — after remediation: test results, configuration snapshots, updated control attestations

Level 4 – Monitoring, Audit and Performance Evaluation

| Criterion | 0 – Not in place | 1 – Ad hoc / Fragmented | 2 – Defined / Repeatable | 3 – Embedded / Measured |
| --- | --- | --- | --- | --- |
| Logging, monitoring and incident handling | Limited logging and monitoring; incident detection and handling are mostly manual and ad hoc. | Some centralised logging or SOC function exists, but coverage is patchy, and playbooks are inconsistent. | Logging, monitoring and incident response are defined for key systems and aligned to ACSC and ISM expectations. | Detection and response are measured, tuned and regularly tested; coverage is deliberate and driven by risk. |
| Internal audit and control testing | Internal audit rarely touches cyber; no structured control testing program. | Audits occur but scope is opportunistic, and findings are addressed on a best-effort basis. | A planned audit and control testing program exists, covering key control domains and high-risk systems. | Audit and testing results are integrated with risk and remediation processes; themes drive structural improvements. |
| Metrics, KPIs and reporting | Minimal or purely technical metrics; little linkage to risk or obligations. | Some metrics exist (e.g. incidents, vulnerabilities) but they are not consistently reported or trusted. | A defined set of KPIs/KRIs covers key aspects of governance, risk, controls and incidents; reported regularly to governance forums. | Metrics are accurate, automated where possible and drive decisions, prioritisation and resource allocation. |

Typical deliverables for a government security program at Level 4

- Security monitoring and incidents

  - Security monitoring / SOC plan (scope, log sources, retention, responsibilities)

  - SOC runbooks and incident playbooks

  - Evidence of centralised logging and monitoring coverage

  - Security incident register and significant incident PIRs / lessons learned

  - Regular vulnerability and patch management reports and exceptions register

- Audit, review and control testing

  - Annual cyber / internal audit and assurance plan (ISM / PSPF aligned)

  - Completed internal audit and IRAP reports with agreed actions

  - Control testing evidence (access reviews, config checks, E8 validation, etc.)

  - Consolidated register of findings / non-conformities with owners and due dates

- Performance, risk and governance reporting

  - Routine security governance packs for the Exec Committee / ARC (ISM, PSPF, E8, IRAP status)

  - Cyber risk dashboard linked to enterprise risk register

  - Defined and tracked KPIs/KRIs (incidents, vuln SLAs, findings age, etc.)

  - Governance minutes showing decisions, risk acceptance and reprioritisation

- Continuous improvement

  - Corrective action / continuous improvement register

  - Exercise plans and post-exercise reports (cyber, BCP/DR)

  - Updated policies, standards and procedures driven by findings and lessons learned

Level 5 – Integrated GRC Platform and Continuous Control Monitoring

| Criterion | 0 – Not in place | 1 – Ad hoc / Fragmented | 2 – Defined / Repeatable | 3 – Embedded / Measured |
| --- | --- | --- | --- | --- |
| System of record for risks, controls and obligations | Information is spread across spreadsheets, documents and inboxes; no single source of truth. | A GRC or similar tool exists but only supports a narrow use case (e.g. incidents or risks) and is poorly adopted. | A core GRC platform is implemented for selected domains (risks, controls, obligations, audits), with agreed data structures. | The platform is the accepted source of truth across domains; data quality is managed, and the tool is integrated with other systems. |
| Automation and integrations (e.g. E8, vuln, logs) | No automated feeds: data is entered manually and infrequently. | Some integrations or imports exist but are one-off or unreliable. | Key telemetry (vulnerabilities, asset inventories, some E8 metrics) is fed into the platform regularly. | Continuous control monitoring is in place for priority controls; automated data drives dashboards and alerts used by management. |
| Executive dashboards and decision support | Executives receive ad hoc or highly technical reports that are hard to interpret. | Some dashboards exist but focus on tools or technology, not risk and obligations. | Regular dashboards present PSPF/ISM/E8 posture, risks and trends in business language to Executive Committee and ARC. | Dashboards are trusted and routinely used to make decisions, set priorities and support external reporting. |

Typical deliverables for a government security program at Level 5

- GRC platform as system of record

  - Configured GRC platform with agreed data model for risks, controls, obligations, incidents, audits

  - On-boarded systems, registers and workflows (risk, issue, findings, exceptions, approvals)

  - Data quality rules and ownership defined (stewards, reconciliations, completeness checks)

- Integrations and data feeds

  - Automated feeds from vulnerability scanners, asset inventories, identity systems, ticketing tools, SIEM/SOC

  - Integration with enterprise risk, project/portfolio and HR systems where relevant

- Continuous control monitoring (CCM)

  - Defined CCM use cases and mapped controls (e.g. E8, privileged access, patch SLAs, logging coverage)

  - Automated control checks with thresholds, alerts and exception workflows

  - CCM result dashboards showing pass/fail trends and coverage across systems

- Executive dashboards and decision support

  - Role-based dashboards for CISO, Executive Committee and ARC (PSPF/ISM/E8 posture, risks, incidents, findings, trends)

  - Regular use of these dashboards in governance forums, with decisions and actions recorded

- Reporting and external alignment

  - Standardised, repeatable reports for PSPF, ACSC / Essential Eight, IRAP remediation tracking and portfolio bodies

- Automation and workflow

  - Automated routing of incidents, findings, exceptions and approvals to the right owners

  - Reminder and escalation workflows for overdue risks, actions and attestations

  - Periodic policy / control attestations executed and recorded through the platform
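The automated control checks listed under continuous control monitoring can start very small. The sketch below evaluates one hypothetical patch-timeliness control from scanner or ticketing data: records that breach the SLA become exceptions for the workflow, and the pass/fail result is what a CCM dashboard would trend over time. The 14-day SLA, the 95% pass threshold, the record fields, the control ID and the function name `check_patch_sla` are all assumptions for illustration; set real values from your target Essential Eight maturity level and internal standards.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class PatchRecord:
    asset: str
    released: date            # date the patch or update became available
    applied: Optional[date]   # None if not yet applied


@dataclass
class ControlResult:
    control_id: str
    compliance_pct: float
    passed: bool
    exceptions: List[str] = field(default_factory=list)  # assets to route to owners


def check_patch_sla(control_id: str, records: List[PatchRecord], today: date,
                    sla_days: int = 14, pass_threshold_pct: float = 95.0) -> ControlResult:
    """Evaluate one patch-SLA control check against a pass threshold.

    A record is an exception if it was applied after the SLA, or is still
    unapplied and already older than the SLA. Unapplied records that are
    still inside the SLA window count as compliant for now.
    """
    if not records:
        # No telemetry is treated as a failure: the control cannot be evidenced.
        return ControlResult(control_id, 0.0, False)
    exceptions = []
    for record in records:
        end = record.applied or today
        if (end - record.released).days > sla_days:
            exceptions.append(record.asset)
    compliant = len(records) - len(exceptions)
    pct = round(100.0 * compliant / len(records), 1)
    return ControlResult(control_id, pct, pct >= pass_threshold_pct, exceptions)
```

Running a check like this on every data refresh, storing each `ControlResult`, and sending the `exceptions` list into the exception workflow gives the pass/fail trend and coverage view described above without anyone re-keying data.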

For further information, or for a no-obligation briefing on our GRC service offering and capability, please contact Ionize at sales@ionize.com.au
